TL;DR

LLMs such as ChatGPT and Bard do not use entities (natural language “building blocks”) to generate content. So it may not come as a surprise that entities are often the missing element when AI is used to produce SEO content. Most AI-generated content is unlikely to succeed in search precisely because the output of most LLMs does not properly connect the underlying entities that an article needs to cover to be authoritative on a topic.

The AI Tool SEO comparison study is in!

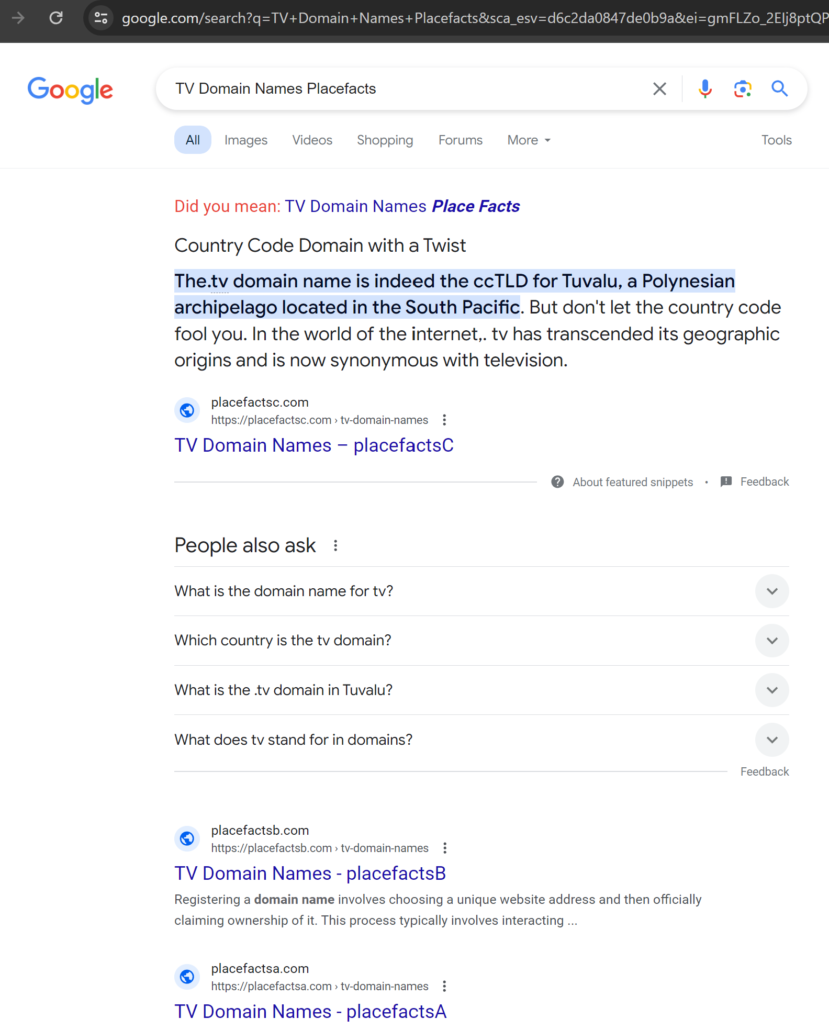

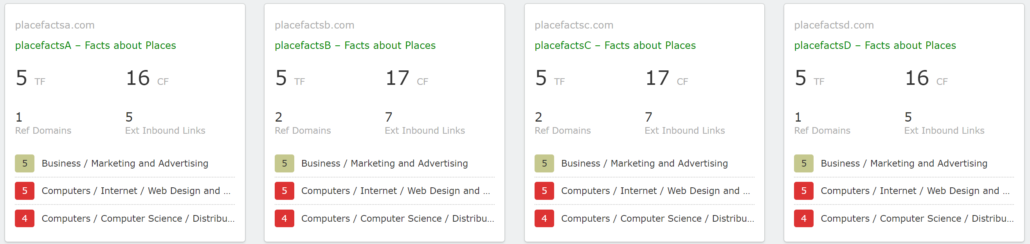

I ran a test comparing four AI content tools. The test has been running since mid-July 2023, so at the time of revealing the results (today), it has been running for 10 months. Six weeks after launch, with some initial data in hand, I wrote about the study on my personal blog because I wanted to create links that would allow Google to discover all 16 AI-generated pages used in the test. At that time, however, I was careful not to disclose which AI tools were used, to reduce the chance of contaminating the data.

We can now reveal everything, with 10+ months of data reinforcing the suspicions we had after the first six weeks of testing.

In short, we tested four different content writing tools. For each tool, we built a website with the same theme and an almost identical domain name. On each site, we created four pages of unmodified content from that site's tool, targeting the same four phrases, for a total of 16 pages.

The full details of the case study, including the actual domain names, are noted below so anyone can review the sites provided, but I want to highlight one specific finding…

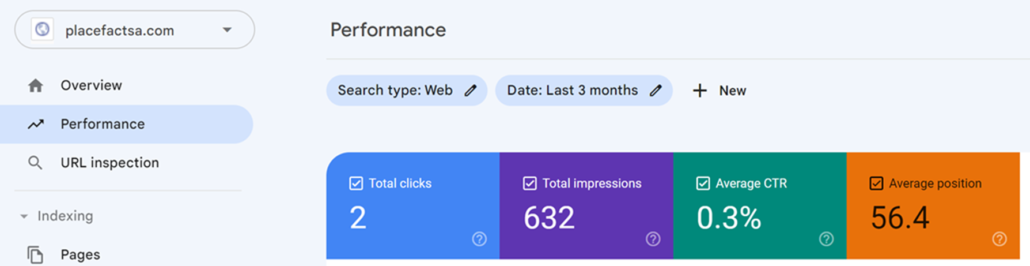

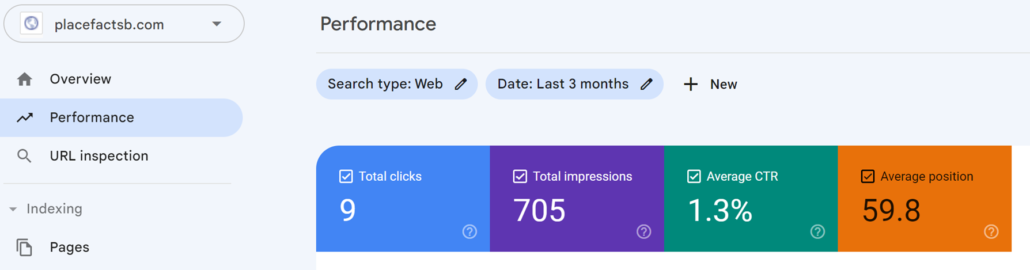

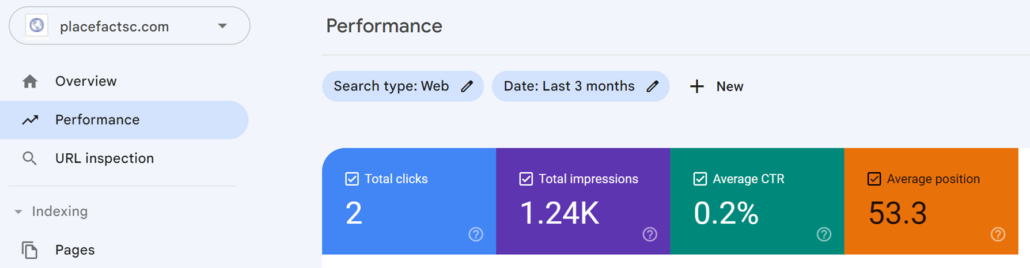

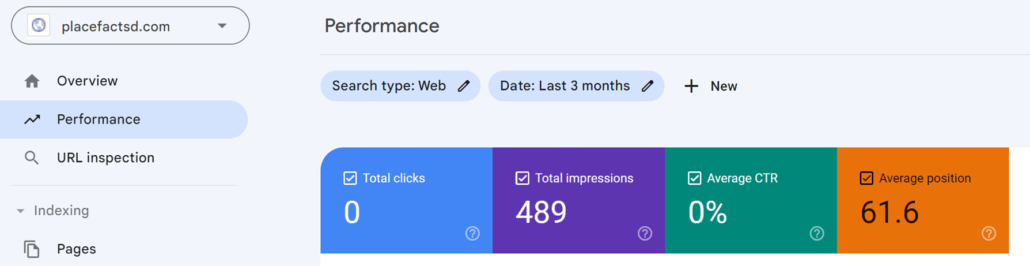

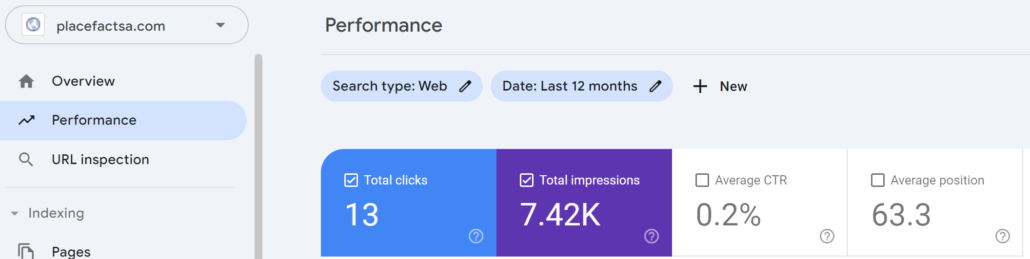

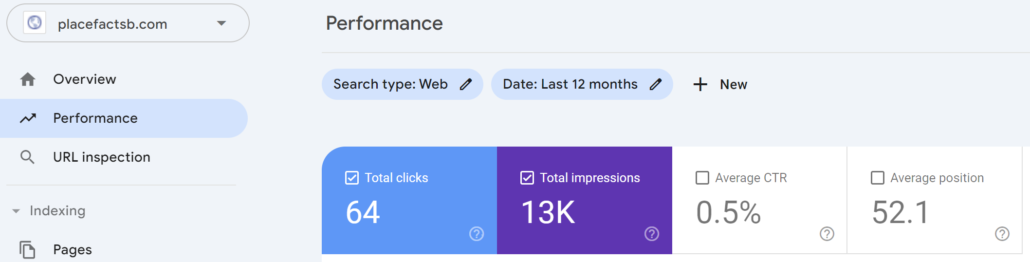

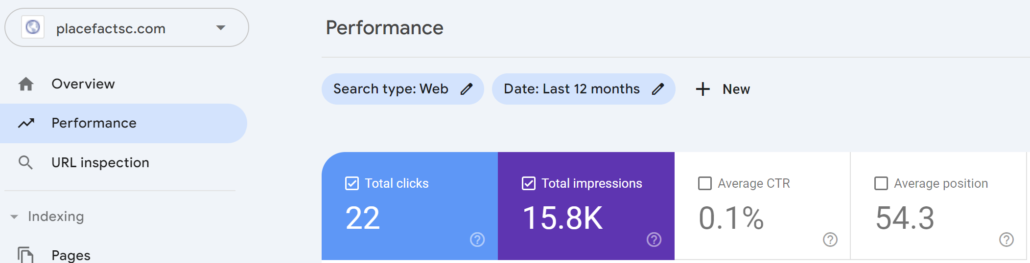

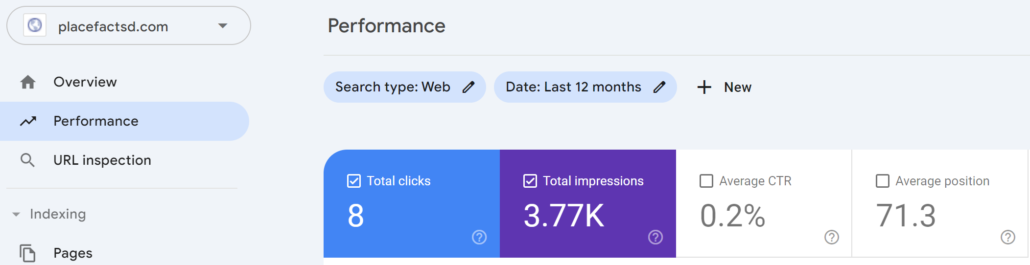

InLinks uses entities to generate content briefs for the AI tool to follow. InLinks only came SECOND in terms of impressions, but FIRST in terms of clicks. Specifically, the tool had a CTR of 0.5% whilst the next best scored only 0.2%. The tool with the most impressions (Surfer) only had a CTR of 0.1%.

Findings taken from Google Search Console
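Since clicks are simply impressions multiplied by CTR, the CTRs above show how a site can come second on impressions but first on clicks. The sketch below works this through using only the CTRs reported here (0.5% for InLinks, 0.1% for Surfer). Impression counts are left as parameters because the raw Search Console figures are not reproduced in this section; the 10,000 figure is purely hypothetical, and the function names are illustrative.

```python
from fractions import Fraction

# CTRs reported from Google Search Console in this study, as exact fractions.
CTR_INLINKS = Fraction(5, 1000)  # 0.5%
CTR_SURFER = Fraction(1, 1000)   # 0.1%


def clicks(impressions, ctr: Fraction) -> Fraction:
    """Expected clicks = impressions x click-through rate."""
    return Fraction(impressions) * ctr


def break_even_share(ctr_challenger: Fraction, ctr_leader: Fraction) -> Fraction:
    """Share of the leader's impressions a challenger needs to match its clicks.

    The challenger matches when impressions_c * ctr_challenger equals
    impressions_l * ctr_leader, i.e. impressions_c / impressions_l equals
    ctr_leader / ctr_challenger.
    """
    return ctr_leader / ctr_challenger


share = break_even_share(CTR_INLINKS, CTR_SURFER)
print(f"InLinks matches Surfer's clicks with {share} of Surfer's impressions")

# Hypothetical impression count, for illustration only.
surfer_impressions = 10_000
print(clicks(surfer_impressions, CTR_SURFER))           # 10
print(clicks(surfer_impressions * share, CTR_INLINKS))  # 10
```

On these CTRs, a 0.5% site needs only one fifth of the impressions of a 0.1% site to earn the same number of clicks. Exact fractions are used so the comparison is not affected by floating-point rounding.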

How LLMs generate content

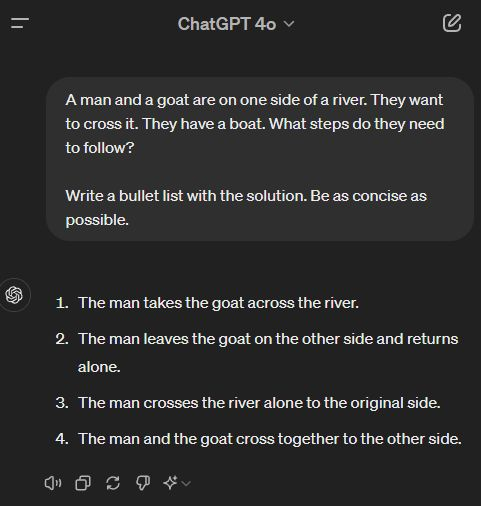

LLMs like ChatGPT do not use entities to generate text. They do not use much logic, either. Their output appears sensible because they string words together in patterns that we see in content all the time: they recognize patterns and recreate them in their output. The result can look very believable until you read it closely. A good example of this was recently published by various sources (I am sorry, I cannot track down the originator). It shows how an LLM drew inspiration from a popular IQ test but completely missed that the question it was asked differed from the original. Just have a read:

This demonstrates that LLMs (even the newest, GPT-4o) string text together rather than solve a problem. Solving the problem is just a bonus if it happens.
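To make the idea of stringing words together in patterns concrete, here is a deliberately crude analogy: a bigram (first-order Markov chain) text generator. Real LLMs are transformer networks trained on vastly more data and conditioned on far longer context, so this is not how ChatGPT works internally. It only shares the basic property of choosing each next word from patterns seen in training text, with no model of what the words refer to. The tiny corpus and function names are made up for illustration.

```python
import random
from collections import defaultdict


def train_bigrams(text: str) -> dict:
    """Map each word to the list of words that followed it in the training text."""
    words = text.split()
    followers = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return followers


def generate(followers: dict, start: str, max_words: int, seed: int = 0) -> str:
    """Emit words by repeatedly sampling a follower of the previous word.

    There is no notion of meaning or entities here: the generator only knows
    which word tended to come next, so it can produce fluent-looking text
    that is factually wrong.
    """
    rng = random.Random(seed)
    output = [start]
    for _ in range(max_words - 1):
        options = followers.get(output[-1])
        if not options:  # dead end: this word never had a follower
            break
        output.append(rng.choice(options))
    return " ".join(output)


corpus = (
    "tuvalu is a country in the pacific . "
    "tuvalu is a small island nation . "
    "the pacific is a large ocean ."
)
model = train_bigrams(corpus)
print(generate(model, "tuvalu", max_words=12))
```

Every word pair this generator emits appears somewhere in the corpus, yet a path such as "tuvalu is a large ocean ." is perfectly possible: locally plausible, globally wrong.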

How Search Engines Work Differently

As I have discussed many times before, ever since Google bought Metaweb in 2010, it has been trying to look at the world’s information through entities (objects in a database) and how those entities connect to each other. This means that good SEO content needs not only to cover all the right topics to solve a searcher’s query but also to connect those ideas coherently.

Most AI generators do not do that. They do not have that added value layer.
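One way to picture the difference is to treat a topic as a small knowledge graph, with entities as nodes and known relationships as edges. The sketch below scores a draft on the two things distinguished above: which expected entities it mentions (coverage) and which known relationships between them appear together in the same sentence (connection). The graph, the drafts and the naive substring matching are all hypothetical simplifications; this is not how Google or InLinks actually extracts or links entities.

```python
import re

# Hypothetical mini knowledge graph for the topic "Tuvalu": each edge links two
# entities that a well-connected article might relate to each other.
TOPIC_GRAPH = {
    ("Tuvalu", "Pacific Ocean"),
    ("Tuvalu", "Funafuti"),
    ("Tuvalu", ".tv"),
    ("Tuvalu", "pandanus"),
}


def entities_in(text: str, entities: set[str]) -> set[str]:
    """Naive entity detection: case-insensitive substring match."""
    lowered = text.lower()
    return {e for e in entities if e.lower() in lowered}


def score_draft(draft: str, graph: set[tuple[str, str]]) -> tuple[float, float]:
    """Return (coverage, connection) for a draft against a topic graph.

    coverage   = share of the graph's entities mentioned anywhere in the draft
    connection = share of the graph's edges whose two entities co-occur
                 in at least one sentence
    """
    all_entities = {e for edge in graph for e in edge}
    covered = entities_in(draft, all_entities)

    # Split into sentences at ., ! or ? followed by whitespace.
    sentences = re.split(r"(?<=[.!?])\s+", draft)
    connected = set()
    for sentence in sentences:
        found = entities_in(sentence, all_entities)
        connected |= {edge for edge in graph if set(edge) <= found}

    return len(covered) / len(all_entities), len(connected) / len(graph)


# Same entities, but listed in isolation versus related to each other.
list_draft = (
    "Tuvalu is in the Pacific Ocean. Funafuti is the capital. "
    "Pandanus grows widely. The .tv domain earns revenue."
)
connected_draft = (
    "Tuvalu is in the Pacific Ocean. Funafuti is the capital of Tuvalu. "
    "Tuvalu earns revenue from its .tv domain. Pandanus grows throughout Tuvalu."
)
print(score_draft(list_draft, TOPIC_GRAPH))       # (1.0, 0.25)
print(score_draft(connected_draft, TOPIC_GRAPH))  # (1.0, 1.0)
```

Both drafts mention every entity, so a keyword-style check would rate them equally; only the second relates them to each other.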

The AI Tools We Tested

In my test, I used four tools. Please bear in mind that the text was generated on July 12, 2023 (so 10 months before this post). I imagine all tools (including InLinks) have improved their UX and features since then. Indeed, back then, ChatGPT 4.0 was not even publicly available. Now, it is baked into InLinks and, I assume, other tools by default.

Apart from InLinks, I chose two other tools that add an SEO value layer: Frase.io and SurferSEO. I consider both to be serious competitors to InLinks, and I want to make it clear that I have every respect for their makers. Surfer in particular has a huge following, much bigger than InLinks', and I can only aspire to catch up with Michal and his team. But competition is what stimulates innovation. For my fourth tool, I chose one of the “original” AI content creators, Copy.ai.

| Website | Tool used |
|---|---|
| https://placefactsa.com | Frase.io |
| https://placefactsb.com | InLinks.com |
| https://placefactsc.com | Surfer SEO |
| https://placefactsd.com | copy.ai |
| https://placefactse.com | Not known! See below* |
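The test design (one tool per site, the same four phrases on every site) can be written out as a small grid. This sketch simply enumerates it from the table above and the four target phrases used in the study; the variable names are illustrative, and the fifth site, whose tool is listed as not known, is left out.

```python
from itertools import product

# Site-to-tool mapping from the table. placefactse.com is deliberately
# excluded because its tool is listed as not known.
SITES = {
    "https://placefactsa.com": "Frase.io",
    "https://placefactsb.com": "InLinks.com",
    "https://placefactsc.com": "Surfer SEO",
    "https://placefactsd.com": "copy.ai",
}

# The four target phrases from the study.
PHRASES = ["Pandanus Leaves", "Travel to Tuvalu", "Tuvalu facts", "TV Domain Names"]

# One page per (site, phrase): every page on a site comes from that site's tool.
test_pages = [
    (site, tool, phrase)
    for (site, tool), phrase in product(SITES.items(), PHRASES)
]

for site, tool, phrase in test_pages:
    print(f"{site:<26} {tool:<12} {phrase}")
print(len(test_pages))  # 16
```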

Mitigating uncertainty

Few SEO tests are “sound”. But here are some of the things I did to try to avoid bias:

- All four sites had exactly the same WordPress setup and theme.

- Each of the four pages used the same title for content generation on every site.

- All four sites were hosted on the same IP address and routed through the Cloudflare CDN.

- Each page had a unique AI-generated image, and all 16 images were created with DALL·E.

- Nobody knew about the tests until six weeks in.

- Nobody knew which tool was behind which site until today.

The initial results tell half the story.

Searching Google for each keyword together with “Placefacts” shows how each site is doing for each target phrase. In most cases, all four sites were on the first page of the SERPs, although, interestingly, one or two pages seemed to disappear for their target keyword. Even so, for each keyword, one of our four sites reached position 1 (as of 20 May 2024).

The initial results were InLinks:2, SurferSEO:2! A DRAW at this stage.

You can also compare the results for yourself!

All the sites are still live, so you can see how each one performs for each target phrase. Here are quick links to the Google search results. You are likely looking from different countries, and as soon as this post goes live, the results are open to manipulation. So let me share the keywords and the SERP screenshots as I see them today, from a UK computer using a Texas IP address, in incognito mode on Chrome (which did mean Google had to check that I was human!):

- Pandanus Leaves: Win for Surfer
- Travel to Tuvalu: Win for InLinks
- Tuvalu facts: Win for InLinks
- TV Domain Names: Win for Surfer
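To reproduce these checks, a Google search URL can be built for each of the four target phrases combined with “Placefacts”. This sketch assumes Google's standard `https://www.google.com/search?q=` query format; as noted above, what you see will still vary with country, IP address and personalization.

```python
from urllib.parse import urlencode

# Target phrases from the study; each is searched together with "Placefacts".
KEYWORDS = ["Pandanus Leaves", "Travel to Tuvalu", "Tuvalu facts", "TV Domain Names"]
BRAND = "Placefacts"


def search_url(keyword: str, brand: str = BRAND) -> str:
    """Build a Google search URL for '<keyword> <brand>' (spaces encoded as '+')."""
    return "https://www.google.com/search?" + urlencode({"q": f"{keyword} {brand}"})


for kw in KEYWORDS:
    print(search_url(kw))
```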

The other half of the story shows a clear win for InLinks (and for Entity SEO)

Knowing the relative rankings for each article's main keyword IS interesting. But as many SEOs will tell you these days, topic clustering is an important part of SEO. This is why I chose “Tuvalu” as the theme for all four pages of content: so that the pages could connect through a common theme.

Because of the way they work, Surfer, Frase and InLinks all collect information from the top ten websites ranking for each target phrase and use it to work out what to write. If the resulting content is written “well”, it should rank not only for the listed keyphrase but also for other queries.
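A much-simplified sketch of that idea: given the text of the pages ranking for a phrase, count how many of them mention each candidate term, and put the terms shared by most competitors into the brief. The page snippets, the candidate terms and the 50% threshold are hypothetical, and only four stand-in pages are used instead of ten. Surfer, Frase and InLinks each use their own, more sophisticated methods (entity-based, in InLinks' case), which this does not reproduce.

```python
def brief_terms(pages: list[str], candidates: list[str], min_share: float = 0.5) -> list[str]:
    """Return candidate terms mentioned by at least `min_share` of the pages.

    Terms are ordered by how many pages mention them (most common first);
    ties keep the order in which they appear in `candidates`.
    """
    if not pages:
        return []
    lowered = [p.lower() for p in pages]
    # Document frequency: number of pages containing each term (case-insensitive).
    counts = {t: sum(t.lower() in p for p in lowered) for t in candidates}
    keep = [t for t in candidates if counts[t] / len(pages) >= min_share]
    return sorted(keep, key=lambda t: -counts[t])  # sorted() is stable


# Hypothetical stand-ins for the text of top-ranking pages.
top_pages = [
    "Tuvalu is a Polynesian island nation. Funafuti is the capital.",
    "Visit Funafuti lagoon; flights arrive from Fiji.",
    "Tuvalu's atolls sit in the Pacific. Funafuti hosts the airport.",
    "Climate change threatens the low-lying atolls of Tuvalu.",
]
candidate_terms = ["Funafuti", "atolls", "Fiji", "climate change", "Tuvalu"]

print(brief_terms(top_pages, candidate_terms))  # ['Funafuti', 'Tuvalu', 'atolls']
```

A frequency count like this tells you what competitors mention, but not how those things relate, which is the gap the entity-based argument in this post is about.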

In fact, if the content is well targeted to the user query in the first place, Google has done its job, and click-through rate (CTR) should matter much more as a metric (to Google) than impressions. For the site itself, especially one monetized through adverts, clicks would be the main KPI.

Either way… after six weeks, the clues were there that the InLinks content had more traction. After 10 months, I think the results are conclusive. See for yourself.

The results after six weeks, as presented at Pubcon 2023

The Results after 10 months in the Wild

Clearly, good content can create more clicks from fewer impressions in the SERPs due to higher CTRs.

After 10 months, the backlink profiles of all the sites remain almost identical, so what makes the difference? The content!

Summary and Take-Aways

When I started to write this post, I simply wanted to make the point that LLMs and SERPs work differently, so if you write SEO content using AI, you damn well better use a tool that uses entities to connect ideas into an SEO-friendly content brief. Otherwise, the AI generator will miss the point. Then, as I started writing, I realized that I had been running this test for the last 10 months to make this very point!

None of the tools created world-beating results with AI content alone. The sites received only a small, measured amount of internal linking and no technical SEO. Even so, none of the AI-generated pages seem to have been blocked by Google.

But if you want to do better than anyone else when using AI-based tools for SEO, then an entity-based approach is, in our view, a stronger place to start. InLinks takes such an approach, and you should probably try it now.

I usually end with a call to action that you should click on. Today, I would rather you engage in “Brain 2.0” and think about the workflow you currently have for content generation. Whether you use AI or not for the final mile, consider using InLinks to create the content brief.

