How to do an Internal Link Audit for SEO

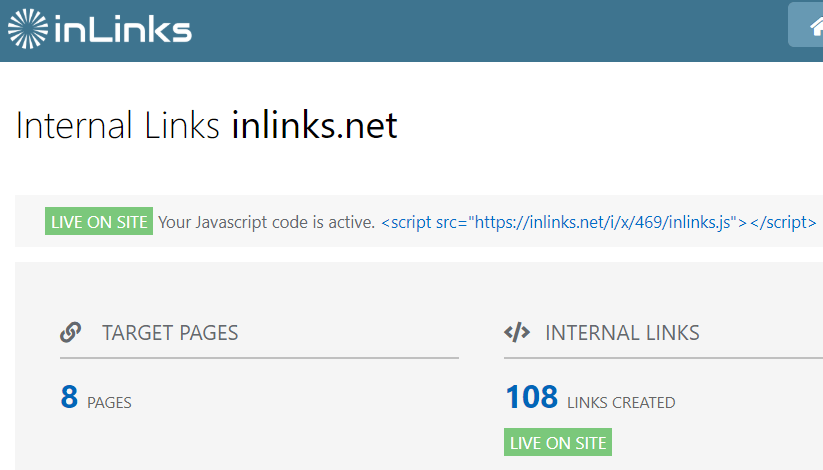

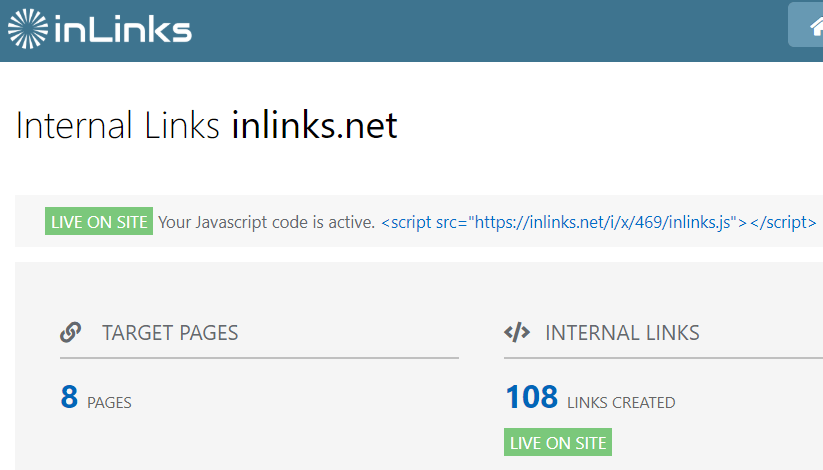

A well-crafted internal link structure improves your chances of your content being seen. At the

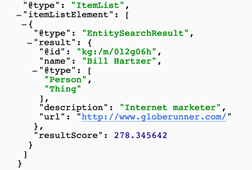

You can define your own entities on your own web pages. When a search engine

Creating Digital Assets Whether you have decided that your strategy is to be the entity,

This is much harder than it sounds, mostly because businesses do not entirely agree with

Being the Entity If you are a business or organisation, then you ARE an entity.

Become an entity or an expert on an entity Your first strategic decision is whether

Getting a Wikipedia entry is fraught with dangers. Inlinks has chosen not to list a

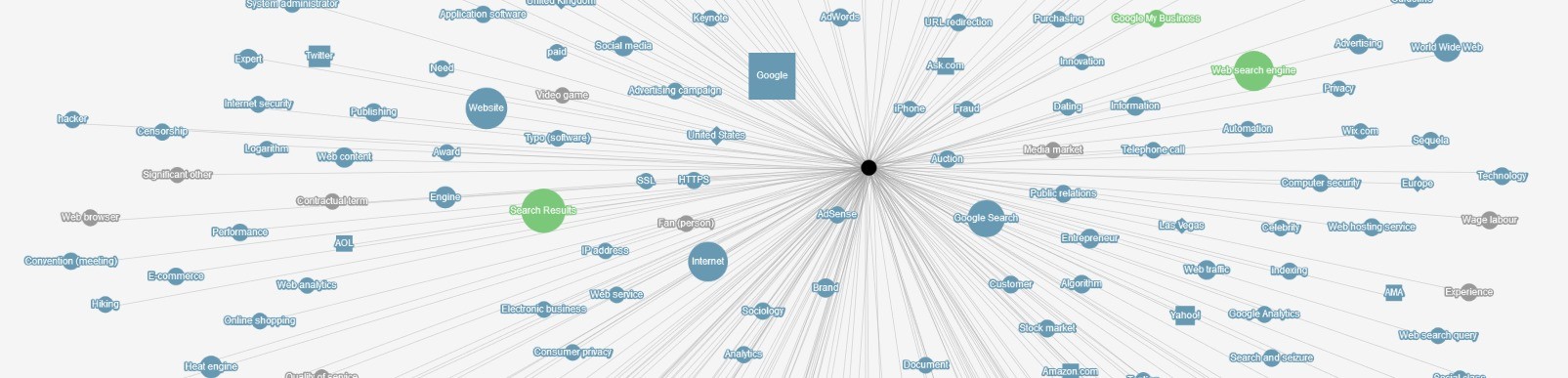

Google’s Knowledge Graph is built upon Entities, primarily – but not exclusively – derived from

Know what is an Entity (and what isn’t) Just as you can type in

This is not the first book or content you should ever read on SEO. There

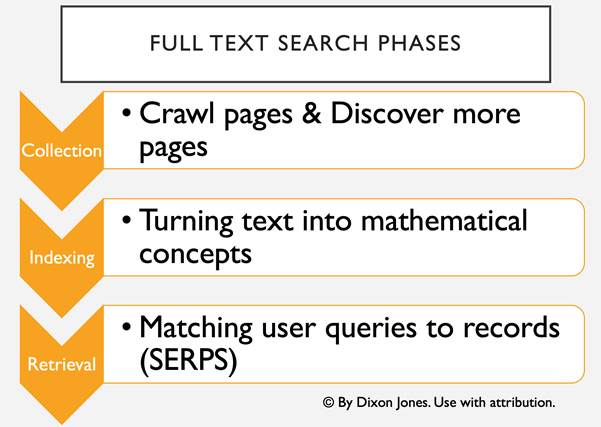

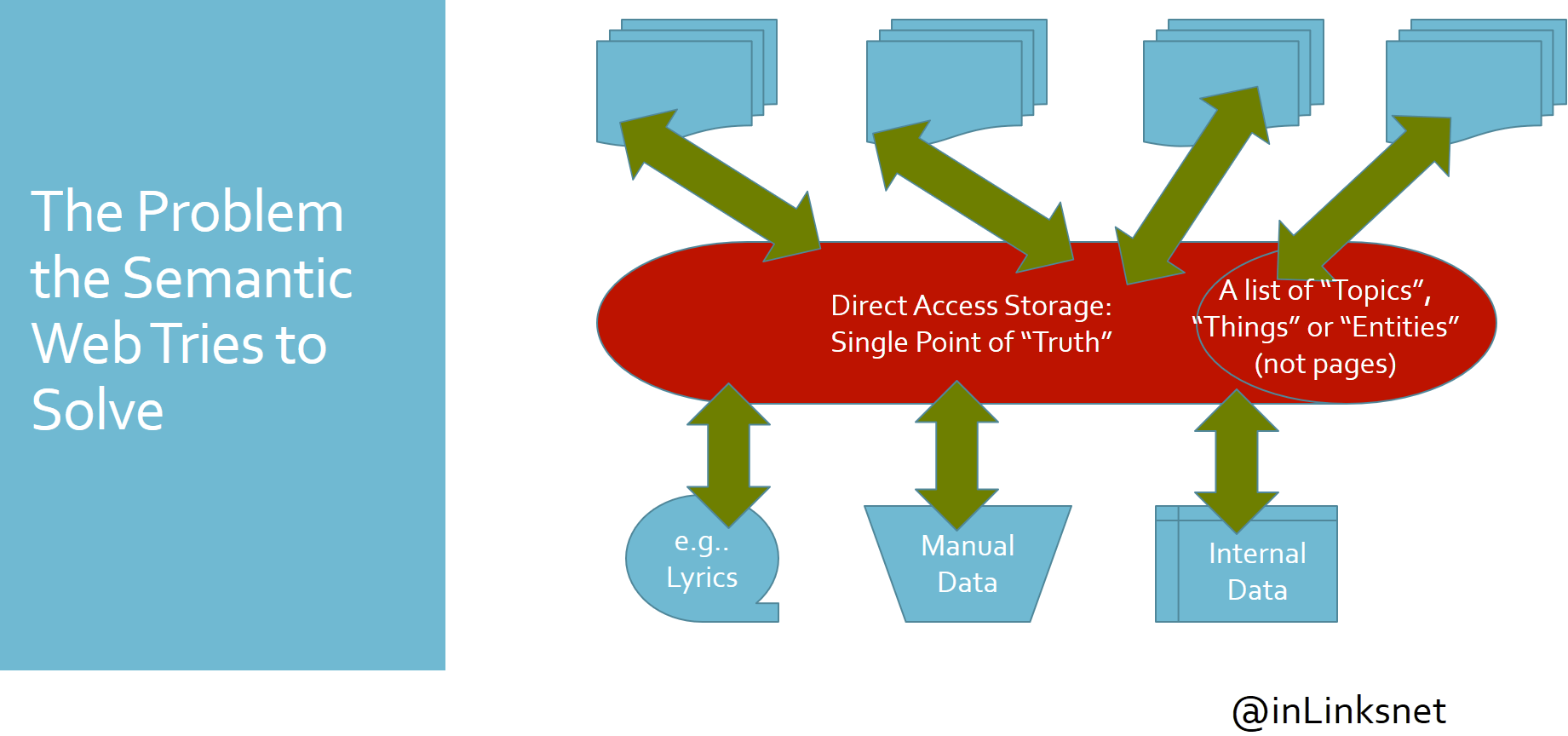

Summary: Modern Search Engines can derive insights across multiple documents instantly. Cataloguing systems over the

What (exactly) IS Google Knowledge Graph? Google Knowledge Graph is technically a knowledge base containing

This Semantic Search Guide shows SEOs how Information Retrieval systems have moved from a web

InLinks UK | Dixon Jones
